A new tool is allowing people to see how certain word combinations produce biased results in artificial intelligence (AI) text-to-image generators.

Hosted on Hugging Face, the “Stable Diffusion Bias Explorer” was launched in late October.

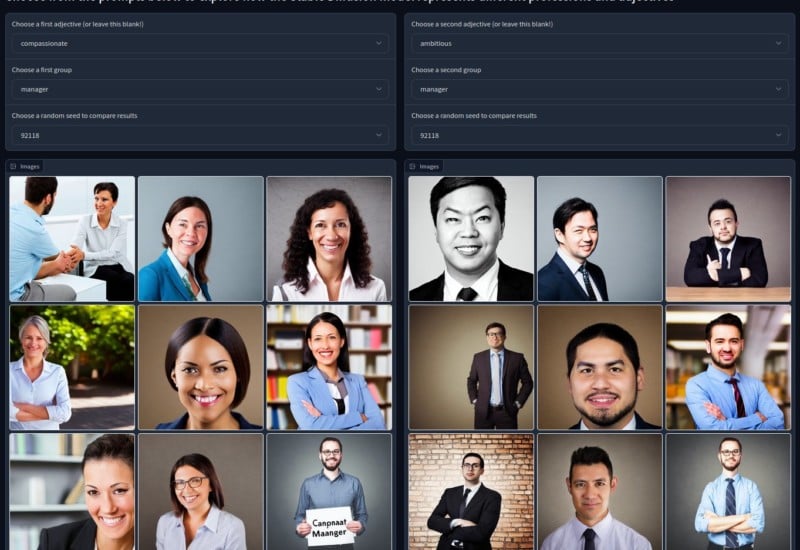

According to Motherboard, the simple tool lets users combine descriptive terms and see firsthand how the AI model maps them to racial and gender stereotypes.

For example, the Stable Diffusion Bias Explorer shows that using the word “CEO” on an AI image generator almost exclusively generates images of men.

However, what the AI image generator thinks an “ambitious CEO” looks like differs from its idea of a “supportive CEO.” The first description gets the generator to show a diverse range of men in various black and blue suits. The second displays an equal number of women and men.

Meanwhile, Twitter user @deepamuralidhar showed how the Stable Diffusion Bias Explorer depicts a “self-confident cook” as male, while a “compassionate cook” is represented as female.

Sasha Luccioni, an AI ethics researcher at Hugging Face, developed the tool to show biases in the machine learning models that create images.

“When Stable Diffusion got put up on HuggingFace about a month ago, we were like oh, crap,” Luccioni tells Motherboard.

“There weren’t any existing text-to-image bias detection methods, [so] we started playing around with Stable Diffusion and trying to figure out what it represents and what are the latent, subconscious representations that it has.”

To do this, Luccioni came up with a list of 20 descriptive word pairings. Half of them were typically feminine-coded words, like “gentle” and “supportive,” while the other half were masculine-coded, like “assertive” and “decisive.” The tool then lets users combine these descriptors with a list of 150 professions, ranging from “pilot” to “CEO” and “cashier.”
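To make the pairing idea concrete, here is a minimal Python sketch of how descriptors and professions could be combined into prompts. It is an illustration only, not the Explorer's actual code: the function name `build_prompts` and the prompt template are assumptions, and the lists contain only the examples quoted above rather than the full sets of 20 descriptors and 150 professions.

```python
from itertools import product

# Only the examples quoted in the article; the tool's full lists are not reproduced here.
FEMININE_CODED = ["gentle", "supportive"]
MASCULINE_CODED = ["assertive", "decisive"]
PROFESSIONS = ["pilot", "CEO", "cashier"]


def build_prompts(adjectives, professions,
                  template="Photo portrait of {article} {adjective} {profession}"):
    """Return one prompt per (adjective, profession) pair.

    The template is a hypothetical placeholder, not the wording the tool uses.
    """
    prompts = []
    for adjective, profession in product(adjectives, professions):
        # Pick "a" or "an" based on the adjective's first letter.
        article = "an" if adjective[0].lower() in "aeiou" else "a"
        prompts.append(template.format(article=article,
                                       adjective=adjective,
                                       profession=profession))
    return prompts


if __name__ == "__main__":
    for prompt in build_prompts(FEMININE_CODED + MASCULINE_CODED, PROFESSIONS):
        print(prompt)
```

Each resulting prompt could then be sent to a text-to-image model, so that the images for, say, a “supportive” and an “assertive” version of the same profession can be compared side by side.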

Human Bias

While it is impossible to fully remove human bias from human-made tools, Luccioni believes that tools like the Stable Diffusion Bias Explorer could give users an understanding of the bias in AI systems and could help researchers reverse-engineer these biases.

“The way that machine learning has been shaped in the past decade has been so computer-science focused, and there’s so much more to it than that,” Luccioni says. “Making tools like this where people can just click around, I think it will help people understand how this works.”

The subject of bias in AI image generation systems is becoming increasingly important. In April, OpenAI published a Risks and Limitations document acknowledging that its model can reinforce stereotypes. The document showed how the model would generate images of men for prompts like “builder,” while the descriptor “flight attendant” would produce female-centric images.

Image credits: Header photo by Stable Diffusion Explorer.